Hey there, you may know I am a developer of the SSH Library libssh. Last week, a post on the libssh mailing list was reporting a connection problem under Redhat RHEL 4.8. It seemed that the new cipher aes128-ctr, recently implemented in libssh, had a little problem…

This bug looked strange, firstly because we had never had any cryptographic problems within libssh, and secondly because the debug output did not show anything obviously broken:

[3] Set output algorithm to aes256-ctr

[3] Set input algorithm to aes256-ctr

[3] Writing on the wire a packet having 17 bytes before

[3] 17 bytes after comp + 10 padding bytes = 28 bytes packet

[3] Encrypting packet with seq num: 3, len: 32

[3] Sent SSH_MSG_SERVICE_REQUEST (service ssh-userauth)

[3] Decrypting 16 bytes

[3] Packet size decrypted: 44 (0x2c)

[3] Read a 44 bytes packet

[3] Decrypting 32 bytes

2010-04-12 13:14:54,211557; 1126189408 procSrvAuth; Did not receive SERVICE_ACCEPT

Meanwhile, the OpenSSH side reported:

sshd[22341]: debug1: SSH2_MSG_NEWKEYS sent

sshd[22341]: debug1: expecting SSH2_MSG_NEWKEYS

sshd[22341]: debug1: SSH2_MSG_NEWKEYS received

sshd[22341]: debug1: KEX done

sshd[22341]: Disconnecting: Corrupted MAC on input.

What does this mean?

libssh was sending garbage and was receiving some kind of garbage as well (a variation of the last error showed an HMAC error). However, the “size” field of the SSH packet (the first 32 bits of every packet) was consistent with the type of packet being received. So what was going on?
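To make the “size” field concrete, here is a minimal sketch of how it can be read from the first decrypted block, assuming the standard SSH binary packet layout in which the packet length is a big-endian 32-bit integer. The function name ssh_packet_length is made up for this illustration and is not libssh's API.

```c
#include <stdint.h>

/* Read the SSH packet length: the first 4 bytes of the decrypted packet,
 * interpreted as a big-endian (network order) 32-bit integer.
 * Hypothetical helper name, for illustration only. */
static uint32_t ssh_packet_length(const unsigned char *first_block)
{
    return ((uint32_t)first_block[0] << 24) |
           ((uint32_t)first_block[1] << 16) |
           ((uint32_t)first_block[2] << 8)  |
            (uint32_t)first_block[3];
}
```

With the bytes 00 00 00 2c at the start of the block, this yields 44, the value shown in the “Packet size decrypted” line of the log.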

Further analysis of the received plaintext on both OpenSSH and libssh showed that the first 16 bytes of the first packet in each direction were correct. So this was a bug that affected the whole stream except the first block of blocksize bytes. The code in libssh implementing aes128-ctr is the following:

    static void aes_ctr128_encrypt(struct crypto_struct *cipher, void *in, void *out,
        unsigned long len, void *IV) {
      unsigned char tmp_buffer[128/8];
      unsigned int num=0;
      /* Some things are special with ctr128 :
       * In this case, tmp_buffer is not being used, because it is used to store temporary data
       * when an encryption is made on lengths that are not multiple of blocksize.
       * Same for num, which is being used to store the current offset in blocksize in CTR
       * function.
       */
      AES_ctr128_encrypt(in, out, len, cipher->key, IV, tmp_buffer, &num);
    }

So, how does AES-CTR work?

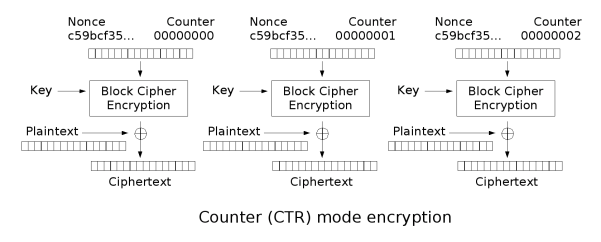

CTR is a stream cipher mode built on top of a block cipher. In SSH, it is used like a block cipher anyway. It has two interesting characteristics:

- The same code is used for encryption and decryption, because it produces an OTP-like (one-time-pad-like) stream of bytes that is XORed with the data

- The key is used for the block cipher encryption, and the input to the block cipher is a nonce combined with a counter
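The two properties above can be sketched in a few lines of C. This is only an illustration under loud assumptions: toy_block_encrypt is a made-up stand-in for the block cipher (it is not AES and not secure), all names are hypothetical, and lengths are assumed to be a multiple of the block size, as in SSH.

```c
#include <stddef.h>
#include <string.h>

#define BLOCK 16

/* Hypothetical stand-in for a block cipher. NOT AES, NOT secure. */
static void toy_block_encrypt(const unsigned char in[BLOCK],
                              unsigned char out[BLOCK],
                              const unsigned char key[BLOCK])
{
    for (int i = 0; i < BLOCK; i++)
        out[i] = (unsigned char)((in[i] ^ key[i]) * 167u + key[(i + 1) % BLOCK]);
}

/* Increment a 128-bit big-endian counter by 1. */
static void counter_inc(unsigned char counter[BLOCK])
{
    for (int i = BLOCK - 1; i >= 0; i--)
        if (++counter[i] != 0)
            break;
}

/* CTR mode: XOR the data with E(key, counter), E(key, counter + 1), ...
 * The counter is updated in place so that the next call continues the
 * same keystream. len must be a multiple of BLOCK in this sketch. */
static void toy_ctr_crypt(const unsigned char *in, unsigned char *out,
                          size_t len, const unsigned char key[BLOCK],
                          unsigned char counter[BLOCK])
{
    unsigned char keystream[BLOCK];

    for (size_t off = 0; off + BLOCK <= len; off += BLOCK) {
        toy_block_encrypt(counter, keystream, key);
        for (int i = 0; i < BLOCK; i++)
            out[off + i] = in[off + i] ^ keystream[i];
        counter_inc(counter);
    }
}
```

Calling toy_ctr_crypt on the ciphertext with the same key and the same starting counter gives back the plaintext, which is why a single function serves both directions. It also shows why both peers must step the counter in exactly the same way: every block after the first depends on it.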

That’s where things get interesting. In our code, IV is used as the nonce and is derived from the cryptographic parameters during the key exchange. I verified that its initial value matched the one in the working implementation. tmp_buffer is a scratch buffer used internally by the cipher; it is normally unimportant. The num variable reports how far we are into the current block, so that the function can emulate a stream cipher. We are not using this feature (SSH always encrypts packets whose length is a multiple of the block size), so the returned value is always 0.
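The reason num stays at 0 is the SSH padding rule: the length field, the padding-length byte, the payload and the padding together must be a multiple of the block size, with at least 4 bytes of padding. Here is a small sketch of that arithmetic; the helper names are hypothetical and the MAC, which is not encrypted, is left out.

```c
#include <stddef.h>

/* Padding needed so that
 *   4 (length field) + 1 (padding-length byte) + payload + padding
 * is a multiple of blocksize, with a minimum of 4 padding bytes.
 * Hypothetical helper names, for illustration only. */
static size_t ssh_padding_len(size_t payload_len, size_t blocksize)
{
    size_t padding = blocksize - ((payload_len + 5) % blocksize);

    if (padding < 4)
        padding += blocksize;
    return padding;
}

/* Number of bytes handed to the cipher for one packet. */
static size_t ssh_encrypted_len(size_t payload_len, size_t blocksize)
{
    return 4 + 1 + payload_len + ssh_padding_len(payload_len, blocksize);
}
```

For the 17-byte payload in the debug log above and a 16-byte block, this gives 10 padding bytes and 32 encrypted bytes, matching the “10 padding bytes” and “len: 32” lines.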

So how is it that libssh with OpenSSL 0.9.8 on my desktop talking to OpenSSH on RHEL 4.8 works like a charm, while libssh with OpenSSL 0.9.7a on RHEL/CentOS 4.8 does not?

I had to go one step further and look at what could be wrong in the way I was using the AES_ctr128_encrypt function. I looked at the code of OpenSSL 0.9.8 and found this:

    /* increment counter (128-bit int) by 1 */
    static void AES_ctr128_inc(unsigned char *counter) {
        unsigned long c;

        /* Grab bottom dword of counter and increment */
        c = GETU32(counter + 12);
        c++; c &= 0xFFFFFFFF;
        [...]

This is the code used to increment the counter. And now the surprise, in the sources of OpenSSL 0.9.7a:

    /* increment counter (128-bit int) by 2^64 */
    static void AES_ctr128_inc(unsigned char *counter) {
        unsigned long c;

        /* Grab 3rd dword of counter and increment */
    #ifdef L_ENDIAN
        c = GETU32(counter + 8);
        [...]

What does that mean? It means that the counter is not incremented the same way in the two versions of AES-CTR128! OpenSSL has shipped both a broken and a correct implementation of AES-CTR128. Since the first block is encrypted with the initial counter value, before any increment takes place, this also explains why only the first 16 bytes in each direction were correct. The CVS commit fixing this dates back to 2003, and I found that OpenSSL 0.9.7c contains the fix. Of course, no documentation explains the difference, and nothing in the header files lets you know whether you are dealing with a broken or a working implementation (I would have expected a #define in the working version).
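The effect of the two increment rules can be illustrated with a small model. This is a sketch, not OpenSSL's code: the fixed behaviour is modeled as adding 1 to a 128-bit big-endian counter, and the old behaviour is modeled, going only by its “by 2^64” comment, as adding 1 to the upper 64 bits. The real 0.9.7a code is endianness-dependent and is not reproduced here; all function names are hypothetical.

```c
#include <string.h>

#define CTR_LEN 16

/* Add 1 to the big-endian integer stored in counter[0..last],
 * carrying toward byte 0. */
static void inc_be(unsigned char *counter, int last)
{
    for (int i = last; i >= 0; i--)
        if (++counter[i] != 0)
            break;
}

/* Fixed behaviour: the whole 128-bit counter goes up by 1. */
static void ctr_inc_by_one(unsigned char counter[CTR_LEN])
{
    inc_be(counter, CTR_LEN - 1);
}

/* Simplified model of the old behaviour: the counter goes up by 2^64,
 * that is, only the upper 64 bits are incremented. */
static void ctr_inc_by_2_64(unsigned char counter[CTR_LEN])
{
    inc_be(counter, 7);
}

/* Count how many consecutive blocks, starting from the same IV, are fed
 * the same counter value under both increment rules. */
static int matching_counter_blocks(const unsigned char iv[CTR_LEN], int nblocks)
{
    unsigned char a[CTR_LEN], b[CTR_LEN];
    int same = 0;

    memcpy(a, iv, CTR_LEN);
    memcpy(b, iv, CTR_LEN);
    for (int i = 0; i < nblocks; i++) {
        if (memcmp(a, b, CTR_LEN) != 0)
            break;
        same++;
        ctr_inc_by_one(a);
        ctr_inc_by_2_64(b);
    }
    return same;
}
```

In this model only the first block ever sees the same counter on both sides, which is consistent with the observation that the first 16 bytes decrypted correctly and everything after them was garbage.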

By studying the sources of OpenSSH, I found that they were not affected by the bug because they implement CTR mode on their own. Not wanting to do this, I simply disabled the compilation of the CTR algorithms in libssh when building against a broken OpenSSL. Yep, “Fixed!”.
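A version check along these lines is one way to express such a guard. This is a sketch under assumptions, not the actual libssh change: it assumes OpenSSL's usual 0xMNNFFPPS version encoding, in which the 0.9.7c release is 0x0090703fL, and the names OPENSSL_0_9_7C, openssl_ctr_is_trustworthy and HAVE_WORKING_AES_CTR are made up for the illustration.

```c
/* First release reported above as containing the fix: 0.9.7c.
 * Assumes the 0xMNNFFPPS encoding used by OPENSSL_VERSION_NUMBER:
 * major 0, minor 09, fix 07, patch 03 ('c'), status f (release). */
#define OPENSSL_0_9_7C 0x0090703fL

/* Returns nonzero if AES-CTR from this OpenSSL version can be used.
 * Hypothetical helper, for illustration only. */
static int openssl_ctr_is_trustworthy(unsigned long version_number)
{
    return version_number >= OPENSSL_0_9_7C;
}

/* Compile-time equivalent, once <openssl/opensslv.h> is included:
 *
 *   #if OPENSSL_VERSION_NUMBER >= 0x0090703fL
 *   #define HAVE_WORKING_AES_CTR 1    (hypothetical macro name)
 *   #endif
 */
```

The compile-time form is the one that matters for disabling the algorithms at build time; the function form is shown only because it is easy to exercise with known version numbers such as 0x0090701fL (0.9.7a).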

Lessons learned

These things are important when you’re debugging cryptographic code that produces garbage:

- Verify the input. Garbage in, garbage out.

- Verify derived inputs such as the IV. Even a single wrong bit can turn the output into garbage.

- Verify the output. It’s possible that the output of the cryptographic algorithm you’re using is good, and that it’s just not what the other party is reading and trying to decrypt…

- Verify that you’re using the crypto correctly. When the only documentation you have is the header file, as with libcrypto, read the source.

- If none of this works… read the source of the crypto and find the difference between the working implementation and the broken one. Maybe it’s something you did not understand and used wrongly, maybe…